Check out this webpage for a thorough overview of running mixed models in R. I wanted to pull out a few pieces of information from this article that I found useful. (If you aren’t familiar with mixed models, the following may not be too meaningful for you.)

Nested vs. Crossed Random Effects

“Before you proceed, you will also want to think about the structure of your random effects. Are your random effects nested or crossed? In the case of my study, the random effects are nested, because each observer recorded a certain number of trials, and no two observers recorded the same trial, so here Test.ID is nested within Observer. But say I had collected wasps that clustered into five different genetic lineages. The ‘genetics’ random effect would have nothing to do with observer or arena; it would be orthogonal to these other two random effects. Therefore this random effect would be crossed to the others.”

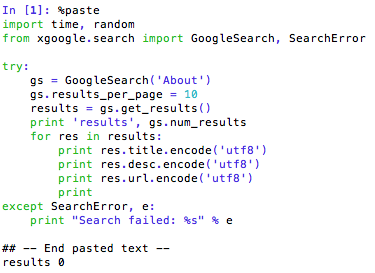
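You can check the nested-versus-crossed distinction directly from how the data are laid out. Below is a minimal Python sketch. It assumes the data are a list of records with `Observer`, `Test.ID`, and a hypothetical `Lineage` column, and the rows are made up for illustration. The rule it applies: one grouping factor is nested in another if each of its levels occurs under exactly one level of the other.

```python
from collections import defaultdict


def is_nested(rows, inner, outer):
    """Return True if every level of `inner` occurs under exactly one level of `outer`."""
    parents = defaultdict(set)
    for row in rows:
        parents[row[inner]].add(row[outer])
    return all(len(levels) == 1 for levels in parents.values())


# Hypothetical trial records: each Test.ID was recorded by a single Observer,
# while each (hypothetical) wasp lineage shows up under several observers.
trials = [
    {"Observer": "A", "Test.ID": 1, "Lineage": "L1"},
    {"Observer": "A", "Test.ID": 2, "Lineage": "L2"},
    {"Observer": "B", "Test.ID": 3, "Lineage": "L1"},
    {"Observer": "B", "Test.ID": 4, "Lineage": "L2"},
]

print(is_nested(trials, "Test.ID", "Observer"))  # True: Test.ID is nested in Observer
print(is_nested(trials, "Lineage", "Observer"))  # False: each lineage appears under both observers (crossed)
```

One caveat: this check works on labels. If trial IDs were reused across observers (trial 1 for observer A and another trial 1 for observer B), it would report them as not nested even though the design is nested. In R's lme4 syntax, writing the random effect as `(1 | Observer/Test.ID)` expands to `(1 | Observer) + (1 | Observer:Test.ID)`. That form treats the IDs as nested regardless of how they are labelled.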

Identifying the Probability Distribution that Fits the Data

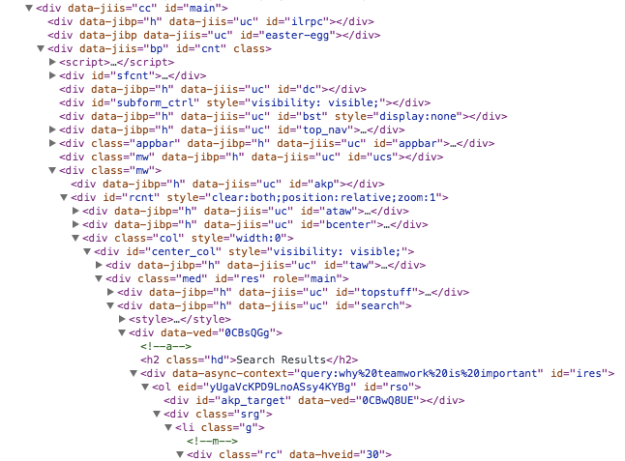

The author of the page plotted the data against the quantiles of several candidate distributions (e.g., binomial, Poisson, gamma, log-normal) in quantile-quantile (Q-Q) plots.

“The y axis represents the observations and the x axis represents the quantiles modeled by the distribution. The solid red line represents a perfect distribution fit and the dashed red lines are the confidence intervals of the perfect distribution fit. You want to pick the distribution for which the largest number of observations falls between the dashed lines. In this case, that’s the lognormal distribution, in which only one observation falls outside the dashed lines. Now, armed with the knowledge of which probability distribution fits best, I can try fitting a model.”
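The rule of counting the points outside the dashed lines can be made concrete. This is a minimal Python sketch, not the article's plotting code, and it makes two simplifying choices. First, it fits the reference line from the sample mean and standard deviation. Second, it draws a pointwise 95% envelope from the approximate standard error of each order statistic. Both may differ in detail from the envelope in the article's plots. The sketch only covers the normal and lognormal cases, and it runs the lognormal check on log-transformed data. Poisson, gamma, or binomial candidates would need their own quantile functions. The sample is hypothetical.

```python
import math
from statistics import NormalDist, mean, stdev


def count_outside_envelope(values, log_scale=False, z_crit=1.96):
    """Count observations outside a pointwise normal-theory Q-Q envelope.

    With log_scale=True the check runs on log(values), which amounts to
    checking a lognormal fit (values must then be positive).
    """
    data = sorted(math.log(v) for v in values) if log_scale else sorted(values)
    n = len(data)
    mu, sigma = mean(data), stdev(data)  # reference line: mu + sigma * z
    std_normal = NormalDist()
    outside = 0
    for i, observed in enumerate(data, start=1):
        p = (i - 0.5) / n                   # plotting position of the i-th order statistic
        z = std_normal.inv_cdf(p)           # theoretical standard-normal quantile
        fitted = mu + sigma * z             # point on the solid reference line
        # Approximate standard error of the i-th order statistic.
        se = (sigma / std_normal.pdf(z)) * math.sqrt(p * (1 - p) / n)
        if abs(observed - fitted) > z_crit * se:  # beyond the dashed lines
            outside += 1
    return outside


# Hypothetical, constructed sample: exact lognormal quantiles, not real data.
n = 40
skewed = [math.exp(NormalDist().inv_cdf((i - 0.5) / n)) for i in range(1, n + 1)]

print("normal:", count_outside_envelope(skewed))
print("lognormal:", count_outside_envelope(skewed, log_scale=True))
```

The distribution with the fewest points outside the envelope is the better candidate, which mirrors the rule quoted above. On this constructed sample, the lognormal check flags no points, while the normal check flags points in the long right tail.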

Failure to Converge

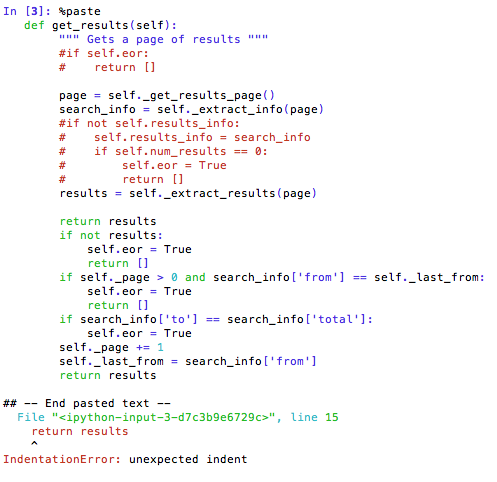

I often ran into “failure to converge” errors when running mixed models. This article describes what now seems like an obvious way to deal with them: systematically drop effects from the model and compare how well the reduced models fit. I appreciate how much my statistics knowledge has grown through my exposure to data science over the last year and a half.

“There is one complication you might face when fitting a linear mixed model. R may throw you a ‘failure to converge’ error, which usually is phrased ‘iteration limit reached without convergence.’ That means your model has too many factors and not a big enough sample size, and cannot be fit. Unfortunately, I don’t have any data that actually fail to converge on a model that I can show you, but let’s pretend that last model didn’t converge. What you should then do is drop fixed effects and random effects from the model and compare to see which fits the best. Drop fixed effects and random effects one at a time. Hold the fixed effects constant and drop random effects one at a time and find what works best. Then hold random effects constant and drop fixed effects one at a time. Here I have only one random effect, but I’ll show you by example with fixed effects.”
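The drop-one-at-a-time procedure quoted above can be written as a small loop. This is a minimal Python sketch that rests on a few assumptions. `fit_model` is a hypothetical callable that you supply: it fits the model with the given fixed and random terms and returns whether the fit converged, along with its AIC. The effect names and the `toy_fit` AIC values are invented purely to exercise the loop. The loop holds one kind of effect constant and drops each term of the other kind in turn. It then discards fits that still fail to converge and ranks the rest by AIC.

```python
from typing import Callable, List, Tuple

Terms = Tuple[str, ...]
# Hypothetical interface: fit_model(fixed, random) -> (converged, aic).
FitFn = Callable[[Terms, Terms], Tuple[bool, float]]


def drop_one_comparison(fixed: Terms, random: Terms, fit_model: FitFn,
                        drop: str = "fixed") -> List[Tuple[float, str]]:
    """Drop each fixed (or random) term in turn, holding the other kind
    constant, refit, and return (aic, dropped_term) for the converged fits,
    best (lowest AIC) first."""
    terms = fixed if drop == "fixed" else random
    results = []
    for term in terms:
        reduced = tuple(t for t in terms if t != term)
        if drop == "fixed":
            converged, aic = fit_model(reduced, random)
        else:
            converged, aic = fit_model(fixed, reduced)
        if converged:  # skip reduced models that still fail to converge
            results.append((aic, term))
    return sorted(results)


# Toy stand-in for a real fitting routine: the full model "fails to converge"
# and the AIC values are invented, not output from any real model.
def toy_fit(fixed: Terms, random: Terms) -> Tuple[bool, float]:
    if len(fixed) == 3:
        return False, float("nan")
    gains = {"Treatment": 40.0, "Temperature": 10.0, "Size": 0.5}
    return True, 150.0 - sum(gains[f] for f in fixed) + 2 * len(fixed)


fixed_effects = ("Treatment", "Temperature", "Size")  # hypothetical names
random_effects = ("Observer",)

print(drop_one_comparison(fixed_effects, random_effects, toy_fit))
# [(104.0, 'Size'), (113.5, 'Temperature'), (143.5, 'Treatment')]
```

Ranking by AIC is one common way to “compare to see which fits the best”; a likelihood-ratio test between nested models is another. When the candidate models differ in their fixed effects, compare fits estimated by maximum likelihood rather than REML. REML likelihoods are not comparable across different fixed-effect structures.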

———

This article goes through more of the “math” of mixed models. I’m putting it here for now so I can look through it in more detail later.